During development of our new Snapper 9260 module, one of the requirements was a boot time (power-on to prompt) of less than 6s. Since the (rather feature-rich) development system took on the order of 45s to boot, there was quite a bit of scope for improvement:

- Enable MMU and data cache in U-Boot: by default, U-Boot does fairly minimal initialisation of hardware. We extended the main board support and network driver code to make full use of the instruction cache (easy) and data cache (a bit more interesting). Time saving: ~3s.
- Use on-board storage for kernel and root filesystem, instead of NFS. Time saving: ~2s.
- Rationalise U-Boot environment: remove the boot delay, flatten out dynamically-generated bootup scripts, use an uncompressed image (or a gzip-compressed image if space is a constraint) instead of a self-decompressing image, and disable redundant image verification. Time saving: ~2s.
- Strip unneeded drivers out of Linux kernel: by removing unneeded systems (e.g. networking, unneeded filesystems, debugging facilities, splash logo), we reduced the Linux kernel size from 3.6MB to 1.8MB. Time saving: ~1.5s, plus reduced kernel boot times.
- Add 'quiet' to Linux boot arguments, suppressing synchronous printk output. Unlike removing printk support entirely, the bootup messages are still available via dmesg, supporting field debugging. Time saving: ~2s.
- Preset loops-per-jiffy setting for Linux kernel. Time saving: ~0.25s.
- Strip the root filesystem: remove unneeded subsystems and slow init scripts, and minimise footprint. Time saving: ~30s.

Overall, these changes allowed us to reduce the boot time for the unit to 5.2s - well within the 6s target - without sacrificing support for interactive access and field upgrades. We hope to gain another 1.2s back between power-on and start of execution by using a new revision of the AT91SAM9260 chip (the delay is due to an erratum on the 'A' revision silicon), which should bring the overall time below 5s - not bad for a full-featured Linux device!
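
The 'quiet' and loops-per-jiffy steps both come down to kernel boot arguments. As a minimal sketch, assuming a boot log captured from one full (uncalibrated) boot is available, the following Python pulls the calibrated value out of the log and appends `quiet` and `lpj=` to an existing argument string. The helper name, the sample log line and the base arguments are hypothetical and only for illustration; `quiet` and `lpj=` are standard Linux kernel parameters, and the lpj value is only valid for the board and clock speed it was measured on.

```python
import re

# Matches the calibration line the kernel prints at boot, for example:
#   Calibrating delay loop... 98.91 BogoMIPS (lpj=494592)
LPJ_PATTERN = re.compile(r"\(lpj=(\d+)\)")


def preset_bootargs(boot_log, base_args):
    """Return base_args with 'quiet' and a preset 'lpj=' value appended.

    Hypothetical helper: boot_log is dmesg output from one full boot,
    base_args is the existing kernel command line.
    """
    match = LPJ_PATTERN.search(boot_log)
    if match is None:
        raise ValueError("no lpj value found in boot log")
    # Drop any existing quiet/lpj settings so they are not duplicated.
    args = [a for a in base_args.split()
            if a != "quiet" and not a.startswith("lpj=")]
    args += ["quiet", "lpj=" + match.group(1)]
    return " ".join(args)


# Sample values are made up for illustration only.
sample_log = "Calibrating delay loop... 98.91 BogoMIPS (lpj=494592)\n"
print(preset_bootargs(sample_log, "console=ttyS0,115200 root=/dev/mtdblock1"))
```

The resulting string would then be stored as the boot arguments in the bootloader environment, so the kernel skips the delay-loop calibration on every subsequent boot.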

How will TI's OMAP3530 change things?
Posted in Industry News on January 05, 2009 by Administrator

We have just completed a design based around Texas Instruments' OMAP3530 microcontroller. This is a high-end chip with lots of CPU performance (2000 Dhrystone MIPS) and a built-in 6-core DSP. We have tied it together with 4GB of flash, 256MB of DDR memory and lots of graphics output features to make something which we think will have great application in the digital signage market.

So far we have the platform playing MPEG4 video with an alpha-blended overlay. It looks very slick for such a tiny wee board, and we have not even begun to use the full features of the platform. As the Open Source support for this chip grows, it is going to be interesting to see what develops.

The key difference between an OMAP3530 and a desktop PC CPU like the Pentium 4 is the level of integration. The OMAP chip includes a DSP capable of H.264 4CIF resolution full duplex real-time encode and decode, something your average modern PC struggles with. Without the DSP, the OMAP would struggle too. The OMAP chip includes video scaling and rotation hardware, something delegated to the graphics controller in a PC. The OMAP chip includes a NAND flash controller for storage, rather than the SATA used in a PC - but my laptop has a solid state drive which is itself built from NAND flash, so the SATA is really just getting in the way. The OMAP chip also has a built-in USB controller, 3D graphics acceleration, SD card controllers and a direct CMOS sensor interface. The PC's processor (Pentium or whatever) uses separate chips, boards or even products for each of these.

So the interesting question is how long it will take for the PC to head down the integration route. The OMAP CPU is fast enough (or nearly) for most modern PC requirements such as web, email and office documents. At a limited, portable-class screen resolution of 1024x768 or so, it makes a good effort.
It seems to me that at least the bottom of the PC market may one day be served by devices such as the OMAP3530 and its successors. As if on cue, Ubuntu and ARM announced recently that they are working on an Ubuntu release for higher-end ARM platforms.

“The release of a full Ubuntu desktop distribution supporting latest ARM technology will enable rapid growth, with internet everywhere, connected ultra portable devices,” said Ian Drew, vice president of Marketing, ARM. “The always-on experience available with mobile devices is rapidly expanding to new device categories such as netbooks, laptops and other internet connected products. Working with Canonical will pave the way for the development of new features and innovations to all connected platforms.”

The focus is of course portable devices. But I wonder whether in 5 years' time we will be using anything else?

PTP-USB
Posted in Uncategorized on December 15, 2008 by Administrator

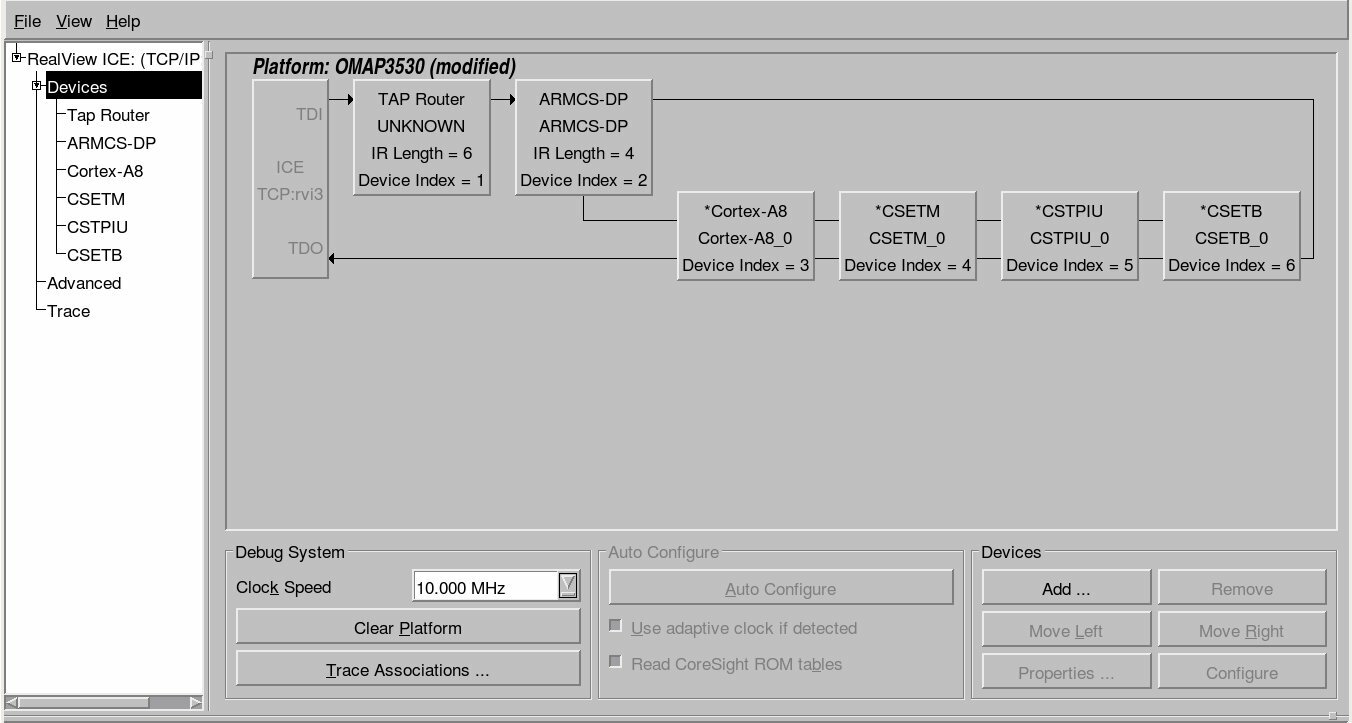

We are currently working on two camera-based products: our own Big-Eye security camera system and a small hand-held digital camera. Both of these cameras require that a user can connect to them from a desktop computer to manage and download the images. However, the primary connection method for Big-Eye is Ethernet, while the other camera uses USB.

There is a standardised protocol called PTP (Picture Transfer Protocol) which has specifications for both IP and USB. PTP is a widely recognised standard. It is used by most consumer digital cameras and is supported 'out of the box' by most major operating systems. PTP allows users to download images (and other files) from a camera and delete unwanted images. It also supports camera properties which can be used to view and control the camera's date and time, exposure time, battery level, etc.

In developing software support for PTP on our camera products we have written a generic, transport-independent PTP layer, which implements each of the PTP commands and properties, and also contains code for managing the images on a camera. This generic layer calls transport-specific (IP or USB) functions for sending and receiving data packets. The major benefit of this approach is that any new features or bug fixes we make to the generic PTP layer are automatically inherited by both the IP and USB cameras.

Combining this PTP code with the image capture software developed for Big-Eye (which includes features such as threaded image capture, Bayer conversion and JPEG encoding), we now have a full Linux-based software solution for consumer-grade cameras.

TI OMAP3 JTAG
Posted in Uncategorized on December 11, 2008 by Administrator

The new release of software (3.3) for the RealView ICE supports Texas Instruments' range of OMAP3 processors. An interesting feature of the OMAP3 family of processors is that the ARM Cortex-A8 core is not automatically added to the scan chain and cannot be auto-detected.
Instead the OMAP3 uses a TAP router (JTAG Route Controller) that must be programmed with what is available on the chain. Luckily ARM has provided "templates" for the OMAP3 family that allow the user to select which processor they are connected to. The template gives the RealView ICE all it needs to connect easily to the ARM core. An example of the OMAP3530 scan chain is shown below.

Bluetooth and Wireless co-existence
Posted in Uncategorized on December 04, 2008 by Administrator

Bluetooth and Wifi (802.11b/g in particular) have two things in common: they're both increasingly ubiquitous in embedded devices, and they both use the 2.4 GHz frequency band. Although resident in the same part of the frequency spectrum, Bluetooth and Wifi use markedly different approaches to transmission management and collision avoidance. As a result, devices (or even localised areas) with both Bluetooth and Wifi active are prone to conflict and service degradation - in extreme cases, leading to wireless networking being completely unusable.

The basic issue is that Wifi breaks down the frequency range into 14 channels, and transmits on a fixed channel. Bluetooth, on the other hand, hops between 79 channels at a rate of up to 1600 hops per second. When a Bluetooth transmission happens to coincide (in time and frequency) with a Wifi packet transmission, both transmissions may be lost. Because Wifi packets are longer, this has a far greater impact on Wifi transmissions. At worst it leads to a cycle of packet loss, a falling Wifi transmission rate (which keeps each packet on air for longer and so increases the risk of collision), and further packet loss - terminating in complete loss of Wifi connectivity.

To address this problem there are a few basic approaches:

1. Limit inter-device interference via distance or shielding. By providing 25dB or greater isolation between antennas, the issue may be avoided entirely, but this is often not practical for small devices.
2. Activity signaling and time sharing.
Many Wifi and Bluetooth modules provide signals indicating that a transmission is currently in progress, or requesting that non-critical transmissions be deferred. This avoids interference, since only one device is transmitting at any given point in time, but can adversely impact performance.
3. Channel signaling. Interference only occurs when both Bluetooth and Wifi attempt to transmit on the same frequency range, so notifying the Bluetooth module of which frequency slots to avoid during its hopping sequence can avoid interference with no loss of Wifi bandwidth, and minimal impact on Bluetooth.
4. Adaptive Frequency Hopping. The Bluetooth v1.2 standard outlines a mechanism for Bluetooth devices to automatically mark and avoid frequency slots that exhibit interference from other devices, thus preventing interference for both Bluetooth and Wifi. Unlike the other solutions listed, this does not require special board-level support, but is only available where all devices in a network support it.

These schemes typically rely on module extensions and require board-level support, and vary in terms of the types of interference they can address effectively. In particular, these solutions may be limited where there is broad-base interference or where communication between interference sources is impossible. A simple general solution is therefore not achievable, so care must be taken during board design and module selection to take these issues into consideration, and to address the specific coexistence needs of the product.
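
Approaches 3 and 4 both come down to a map of which Bluetooth hop channels to avoid. The following Python is a minimal sketch of building such a map, assuming a single Wifi network on a known channel, a nominal 22 MHz 802.11b channel width, and the usual channel plans (Bluetooth channel k at 2402 + k MHz, Wifi channels 1-13 at 2407 + 5n MHz, channel 14 at 2484 MHz). The function names are hypothetical, and how the map is actually delivered to a Bluetooth module is vendor-specific and not shown.

```python
BT_CHANNELS = 79        # Bluetooth hop channels, 1 MHz apart
BT_BASE_MHZ = 2402      # centre of Bluetooth channel 0
WIFI_WIDTH_MHZ = 22     # nominal 802.11b channel width (assumption)


def wifi_centre_mhz(channel):
    """Centre frequency in MHz of a 2.4 GHz Wifi channel (1-14)."""
    if channel == 14:
        return 2484
    if 1 <= channel <= 13:
        return 2407 + 5 * channel
    raise ValueError("Wifi channel must be in 1..14")


def bluetooth_channel_map(wifi_channel):
    """Return 79 booleans; True means the Bluetooth channel is usable."""
    centre = wifi_centre_mhz(wifi_channel)
    low = centre - WIFI_WIDTH_MHZ / 2
    high = centre + WIFI_WIDTH_MHZ / 2
    usable = []
    for k in range(BT_CHANNELS):
        freq = BT_BASE_MHZ + k
        # A hop channel is blocked if it falls inside the Wifi channel.
        usable.append(not (low <= freq <= high))
    return usable


channel_map = bluetooth_channel_map(6)
blocked = [k for k, ok in enumerate(channel_map) if not ok]
print("avoid Bluetooth channels", blocked[0], "to", blocked[-1])
print("usable channels:", sum(channel_map))
```

With one Wifi channel in use, most of the 79 hop channels remain available, which is why channel-based avoidance costs Bluetooth relatively little. With several overlapping Wifi networks the usable set shrinks quickly, which is the broad-base interference case noted above.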

Also be aware that TI uses a non-standard 14-pin JTAG connector. If you need to connect any of the standard ARM JTAG tools to a TI processor (such as the OMAP3 family), you will need a TI JTAG adapter such as the ARM-provided HBI-0027B (
